How I Turned My Second Brain into Connected Intelligence

And how MCP could change everything for you

What is your first impression of MCP (Model Context Protocol)?

When MCP first came out, everyone was excited about AI models making API calls to do tasks for them. My first thought: "So... it's calling tools to get data and generate results. How is this different from what I've already been building?"

I’ve never been a fan of over-engineered knowledge that hides simple ideas behind layers of jargon. As someone who builds and ships frequently, I initially dismissed MCP as another buzzword still taking shape. To me, MCP felt like HTTP, FTP, or any other protocol: just an agreement about how things communicate. Is this really revolutionary, or are we just giving fancy names to things we've been doing all along?

After months of experimenting, building my own MCPs for Substack, and finally integrating one into my company’s systems, my opinion changed completely.

To be fair, I still think MCP is tool calling. MCP isn't creating something new, it's revealing something that's already everywhere, just waiting to be standardized. But that's exactly why it's revolutionary, for AI, data, and building products that scale your unique knowledge.

I see this transformation happening at three levels that’ll soon reach every part of our lives.

What you’ll read in this article:

Level 1: When AI Broke Out of the Chat Box

1.1. My DIY Workaround for AI's Limitations

1.2. The Pattern of MCP Everywhere

Level 2: Building My Connected Second Brain

2.1. A Simple Taste of Connected AI

2.2. Building My Custom Substack Newsletter MCP

2.3. Connecting My Scattered Second Brain

2.4. What This Means for AI Builders

Level 3: How This Democratizes Corporate Knowledge

3.1. One Question Sparked Enterprise Possibilities

3.2. How This Might Transform Your Corporation

Where is MCP going?

Reality Check: MCP Isn't Perfect (Yet)

Your Data Becomes Your Competitive Advantage

Your MCP Implementation Steps

👉 If you’re here for the Substack Newsletter MCP, jump straight to section 2.2.

Let’s dive in.

Level 1: When AI Broke Out of the Chat Box

When AI first came out, we were all wowed by its intelligence and generative capability. It could provide great insights, sometimes even better than experts, but it was still limited as a chat box. The real power, the ability to give other tools orders and actually do things, was mostly missing.

AI felt like the boss, and we were like diligent employees, copying, pasting, searching, and reporting back while waiting for the next set of instructions.

When friction appeared, the builder’s instinct kicked in. Many of us started exploring how to become AI’s boss instead.

1.1. My DIY Workaround for AI's Limitations

Before MCP existed, I was already handling these problems with simple scripts in Cursor.

When web search wasn’t integrated into Cursor, I wrote a script to fetch information from the internet for specific topics.

When I needed Cursor to analyze data and show visual output, I wrote a script that called Python libraries and asked Cursor to invoke it whenever necessary.

When I wanted Cursor to export data in a specific format, I created another script to handle it.

Over time, this collection of tools and research system grew into something powerful. Cursor would follow my guidelines, decide on the next steps, then call the right script automatically, repeating the loop until the task was finished.
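The loop described above can be sketched roughly like this. It is an illustration, not the actual scripts: `fetch_topic`, `export_report`, and `plan_next_step` are hypothetical names, and `plan_next_step` is a hard-coded stub standing in for the decision the model makes at each turn.

```python
from typing import Callable, Dict, List, Optional, Tuple


def fetch_topic(topic: str) -> str:
    """Hypothetical research script (no real network call in this sketch)."""
    return f"notes about {topic}"


def export_report(text: str) -> str:
    """Hypothetical export script that wraps text in a fixed format."""
    return f"# Report\n\n{text}"


# Registry of scripts the assistant is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[[str], str]] = {
    "fetch_topic": fetch_topic,
    "export_report": export_report,
}


def plan_next_step(
    task: str, history: List[Tuple[str, str]]
) -> Optional[Tuple[str, str]]:
    """Stub for the model's choice: (tool_name, argument), or None when done."""
    if not history:
        return ("fetch_topic", task)
    if len(history) == 1:
        return ("export_report", history[-1][1])
    return None


def run_task(task: str, max_steps: int = 10) -> List[Tuple[str, str]]:
    """Decide, call the chosen tool, record the result, repeat until done."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        step = plan_next_step(task, history)
        if step is None:
            break
        tool_name, argument = step
        history.append((tool_name, TOOLS[tool_name](argument)))
    return history


if __name__ == "__main__":
    for name, output in run_task("newsletter growth"):
        print(name, "->", output)
```

The `max_steps` cap is a design choice for the sketch: it keeps a confused planner from looping forever.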

Some executives noticed and asked: “You can do so many powerful things… but I feel intimidated to try this myself. Can I just ask for your help when I need it?”

That highlighted the next challenge: the logic might be simple for techies, but it wasn’t accessible for non-technical people.

1.2. The Pattern of MCP Everywhere

As AI platforms advanced, I started to see the pattern.

Claude Desktop accessing your file system,

Cursor reading and editing files,

ChatGPT connecting to Gmail

— they were all doing the same thing: tool calling.

My skepticism faded once I recognized this.

MCP wasn't creating something new, it was standardizing what was already happening everywhere.

I had images scattered all over my desktop. One day I got fed up and simply asked Claude Desktop: “How many images do I have on my desktop?” It went through the files step by step, and gave me the exact answer. Perfect MCP in action!

The revelation:

We weren’t waiting for MCP to be invented. We were waiting for it to be recognized and standardized.

That’s why MCP matters. Those incremental improvements accumulate, breaking down friction until the protocol becomes part of everyday life.

Even better, you can build your own MCP, customized to work exactly the way you want.

Level 2: Building My Connected Second Brain

2.1. A Simple Taste of Connected AI

ChatGPT Actions give you a taste of what's possible when AI connects to your actual data. When you link ChatGPT to Notion and let it create entries, that's the same tool-calling pattern as MCP under a different name.

Here's how it changes things:

Before: You'd have an idea for a Substack note, jot it down somewhere, revisit it later, refine it with ChatGPT, and only then think about publishing.

Now: You can speak directly to ChatGPT, get variations on your idea, choose one, and ask it to save everything to a dedicated Notion page. Suddenly you have a tidy workspace for all your notes.

These aren't just integrations; they're MCP in practice.

This CustomGPT + Notion integration is adapted from another writer’s work, and you can follow the complete setup in their article.

2.2. Building My Custom Substack Newsletter MCP

I’ve always been obsessed with the Substack landscape. Early on, I collected data on more than 100,000 active newsletters: subscription distributions, emerging writers, content trends. I built dashboards, ran analyses, the whole nerdy setup.

But whenever I wanted to explore something specific, like spotting newly emerging writers in my niche, the process was painful:

Open terminal

Connect to database

Remember table and field names

Write SQL query

Run and wait

By the time I got answers, I’d often lost the follow-up question I was excited to ask. The friction was killing my curiosity.

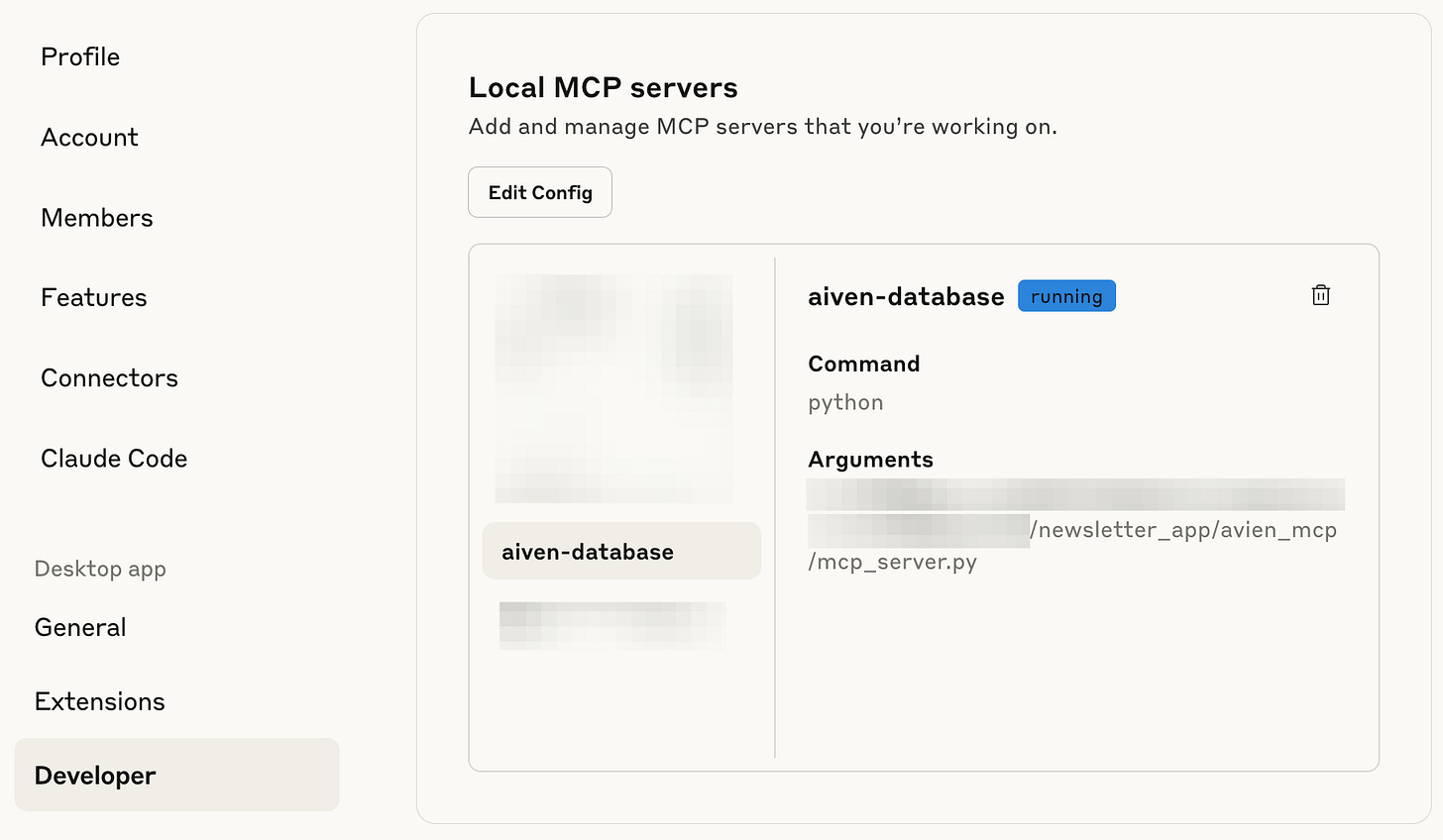

So I built my own MCP: a local server that connected Claude Desktop directly to the Substack newsletter collection.

The setup wasn’t complicated:

a database where SQL queries could run

a schema Claude could use as reference

a Python script to call the right tools: query_database, suggest_query, get_schema…
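To make the pieces above concrete, here is a minimal sketch of two of those tools, `get_schema` and `query_database`. It makes several assumptions: SQLite stands in for the real database, and the `newsletters` table, its columns, and the sample rows are invented for illustration. `suggest_query` is left out. In a real server, an MCP library would register each function as a tool. That step is omitted so the sketch runs with only the standard library.

```python
import sqlite3
from typing import Any, Dict, List


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical newsletters table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE newsletters (name TEXT, topic TEXT, subscribers INTEGER)"
    )
    conn.executemany(
        "INSERT INTO newsletters VALUES (?, ?, ?)",
        [("Example AI Weekly", "ai", 1200), ("Example Food Letter", "food", 300)],
    )
    conn.commit()
    return conn


def get_schema(conn: sqlite3.Connection) -> List[str]:
    """Return CREATE statements so the model can look up table and column names."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return [row[0] for row in rows]


def query_database(conn: sqlite3.Connection, sql: str) -> List[Dict[str, Any]]:
    """Run one SELECT statement and return the rows as dictionaries.

    The prefix check is a naive guard for this sketch, not a substitute
    for read-only database credentials.
    """
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    cursor = conn.execute(sql)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


if __name__ == "__main__":
    db = build_demo_db()
    print(get_schema(db))
    print(query_database(db, "SELECT name FROM newsletters WHERE topic = 'ai'"))
```

Returning the schema as a separate tool lets the model check field names itself before it writes a query.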

With an MCP library to wrap the scaffolding and a quick installer script, I had the whole thing running. In Claude Desktop’s Settings / Developer tab, it appeared as aiven-database (please excuse this totally random name).
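Claude Desktop registers local MCP servers through a JSON config file (`claude_desktop_config.json`) with an `mcpServers` section. Here is a minimal sketch of what such an entry could look like, built in Python so it is easy to check. The server name comes from above. The `python` command and the script path are placeholders, not the output of the actual installer.

```python
import json
from typing import Dict, List


def server_entry(command: str, args: List[str]) -> Dict[str, object]:
    """Build one server entry: the command to launch and its arguments."""
    return {"command": command, "args": args}


config = {
    "mcpServers": {
        # The script path is hypothetical; point it at your own server file.
        "aiven-database": server_entry(
            "python", ["/path/to/substack_mcp_server.py"]
        ),
    }
}

if __name__ == "__main__":
    print(json.dumps(config, indent=2))
```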

Now came the fun part: testing real questions.

I asked “Help me find out how many newsletters are writing about AI in the past 6 months?” Claude understood the question, checked the schema, wrote the SQL query, executed it, and gave me results in minutes.
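For a sense of what the "wrote the SQL query, executed it" step involves, here is a hedged sketch of the kind of query such a question might turn into. The table layout, the `topic` and `created_at` columns, and the sample rows are all assumptions for illustration. The real schema and the query Claude produced are not shown here.

```python
import sqlite3
from datetime import date

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE newsletters (name TEXT, topic TEXT, created_at TEXT)")
conn.executemany(
    "INSERT INTO newsletters VALUES (?, ?, ?)",
    [
        ("Example AI Letter", "ai", "2025-03-27"),
        ("Example AI Digest", "ai", "2024-11-02"),
        ("Example Garden Notes", "gardening", "2025-04-12"),
    ],
)


def count_launched(conn: sqlite3.Connection, topic: str, start: date, end: date) -> int:
    """Count newsletters on a topic created within [start, end], inclusive."""
    row = conn.execute(
        "SELECT COUNT(*) FROM newsletters "
        "WHERE topic = ? AND created_at BETWEEN ? AND ?",
        # ISO dates stored as text compare correctly as strings.
        (topic, start.isoformat(), end.isoformat()),
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    print(count_launched(conn, "ai", date(2025, 3, 6), date(2025, 9, 6)))
```

Passing the topic and dates as parameters, rather than pasting them into the SQL string, keeps the query safe to reuse for follow-up questions.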

Here’s a sneak peek of what it returned:

The AI Newsletter Creation Explosion: 506 New Launches in 6 Months!

506 AI newsletters were created in the past 6 months (March 6 - September 6, 2025). This represents an extraordinary surge in AI content creation.

📊 The Numbers:

Total: 506 AI newsletters launched

Peak Month: March 2025 with 313 launches (61.9%)

Rate: 2.8 new AI newsletters launched every day

Success Rate: Only 3.4% (17 newsletters) achieved 1,000+ subscribers

🏆 The Standouts:

Oli's AI Dispatch: 102,000 subscribers (launched March 27)

Students of AI: 17,000 subscribers (launched March 7)

The AI Opportunity: 16,000 subscribers (launched April 12)

📈 The Reality Check:

Only 34 newsletters (6.7%) have public subscriber data

Only 17 newsletters (3.4%) achieved 1,000+ subscribers

Only 3 newsletters (0.6%) achieved 10,000+ subscribers

472 newsletters (93.3%) have no public subscriber data

🚀 What's Remarkable:

March Madness: 313 newsletters launched in March alone, suggesting a coordinated response to major AI developments that month.

Speed to Scale: Top performers achieved six-figure audiences in under 6 months - unprecedented growth rates.

The Challenge: While launching an AI newsletter is easy, achieving meaningful scale remains extremely difficult. The success rate is brutal but the rewards for winners are massive.

This data shows we're witnessing the largest content creation boom in newsletter history, driven entirely by AI enthusiasm. However, the market may be approaching saturation with such intense competition.

When I shared this note, Alberto Gonzalez commented: “I would like to know what are the 10 Substack newsletters most similar to The Palindrome.”

So here’s the answer:

The best part is, if the first results weren’t quite right, you could keep asking follow-ups until you were satisfied.
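One follow-up worth making a habit is a quick arithmetic check on the figures a model reports. The sketch below recomputes the shares and the daily rate from the counts in the AI newsletter summary above. Only the counts and dates come from that summary. The variable and function names are mine.

```python
from datetime import date

total = 506  # AI newsletters launched in the window

counts = {
    "launched in March": 313,
    "public subscriber data": 34,
    "1,000+ subscribers": 17,
    "10,000+ subscribers": 3,
    "no public subscriber data": 472,
}


def share(count: int, whole: int) -> float:
    """Percentage of the whole, rounded to one decimal place."""
    return round(100 * count / whole, 1)


shares = {label: share(count, total) for label, count in counts.items()}

# Length of the reporting window in days (March 6 to September 6, 2025).
days = (date(2025, 9, 6) - date(2025, 3, 6)).days

if __name__ == "__main__":
    for label, pct in shares.items():
        print(f"{label}: {pct}%")
    print(f"launches per day: {total / days:.2f}")
```

If a recomputed number disagrees with the summary, that is a good prompt for another follow-up question.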

This MCP system cut my newsletter research time from 30 minutes to 3 minutes per analysis. I can now spot patterns 10x faster than with manual database queries, staying focused on the insights while the system handles the mechanics. I can also follow curiosity threads immediately instead of losing insights to technical friction.

What really shifted my thinking:

I wasn’t relying on a generic MCP.

I was building within my own rules and my collected data universe. That meant I could query not just what’s online in someone else’s system, but also link to my private domain knowledge, instantly.

👉 I'm building a plug-and-play installer for this Substack MCP. If you're interested, join the waitlist here (paid members, you’ll get it for free, just sign up and let me know!). You'll be first to know when it's ready! 🚀

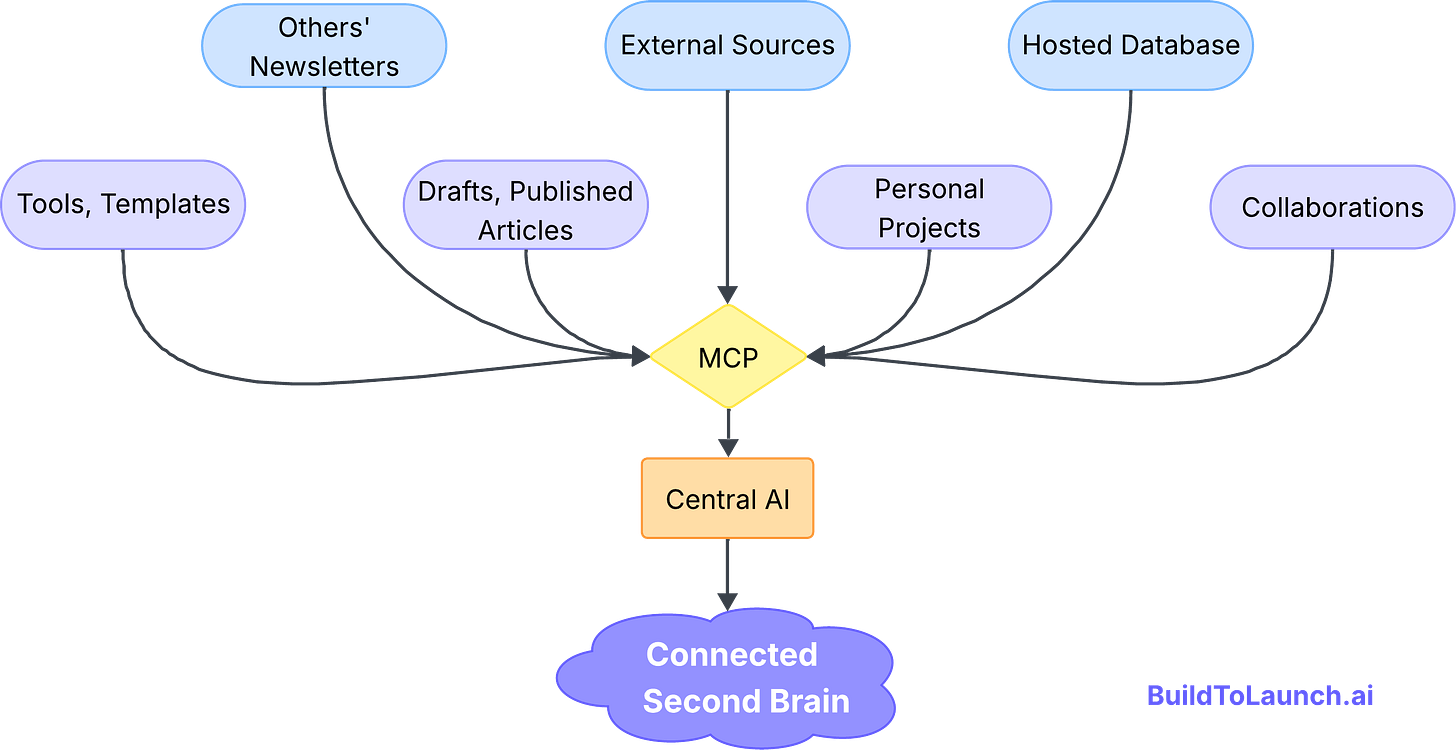

2.3. Connecting My Scattered Second Brain

I’ve written before about RAG and building a second brain without drowning in tool friction. MCP is exactly where that vision comes alive.

My second brain spans many sources:

Tools and templates I’ve collected

My own draft and published articles

Collaborations with publishers and sponsors

Other newsletters I study

External sources like YouTube, email lists, and social platforms

Personal builder projects

The Substack newsletter data discussed in the section above, which is stored online

These are scattered across folders and platforms by design. Writing, app-building, and external resources each have their own space. But that separation makes it harder to connect ideas quickly.

With MCP, the switching cost drops.

As I drafted this very article, I needed to check newsletter distribution data. With my custom MCP running in Cursor, I could retrieve it instantly without opening a remote database.

If I wanted to surface new collaborators on Substack, the same MCP could pull out the emerging voices.

If I needed transcripts from YouTube, Cursor could call a locally hosted LLM, then pass the results to Notion through a connector, all without me touching the Notion database.

If I wanted visuals without writing another Python script, I’d just switch to Claude Desktop, which had the same MCPs installed to generate artifacts on the spot.

Instead of juggling tools, I was finally orchestrating them. The core principle remains simple: eliminate switching costs between the data and the thinking.

My second brain felt connected on my terms.

2.4. What This Means for AI Builders

For anyone building with AI, MCP shifts the approach. You’re no longer building isolated applications, you’re building connected systems that can:

Access domain-specific data instantly

Coordinate across multiple platforms

Scale expertise without scaling manual work

Turn unique data into competitive advantage

The shift is simple: from AI tools to AI systems that understand context and act across your environment.

Level 3: How This Democratizes Corporate Knowledge

3.1. One Question Sparked Enterprise Possibilities

The idea for the Substack MCP came from my day job. We were exploring how to make our lab management system easier for scientists.

The lightbulb moment came in a chat with my boss.

“What if we created an MCP that connects AI with our database and all API endpoints?”

The thought was mind-blowing.

Our system has millions of rows and complex relationships. Normally, when researchers ask a question, engineers dig through code, handle logic, and run calculations. Then they repeat the process for every follow-up question.

With MCP, scientists could just ask questions and get answers. No engineering bottleneck. No translation layer. Just insights, in plain language.

That’s data democratization.

I can’t show the results, but the concept is simple: secure access, proper authentication, and no technical friction.
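One building block of "secure access" is making sure the connection the AI uses cannot write at all. The sketch below shows that idea with SQLite's read-only URI mode. It is an assumption-laden stand-in, not the company system: the `samples` table and file name are invented, and a production setup would more likely rely on database roles and credentials.

```python
import os
import sqlite3
import tempfile
from pathlib import Path


def open_read_only(path: str) -> sqlite3.Connection:
    """Open an existing SQLite file so writes fail at the connection level."""
    return sqlite3.connect(Path(path).as_uri() + "?mode=ro", uri=True)


# Demo setup: create a small database file with a normal (writable) connection.
db_path = os.path.join(tempfile.mkdtemp(), "lab_demo.db")
writer = sqlite3.connect(db_path)
writer.execute("CREATE TABLE samples (id INTEGER, status TEXT)")
writer.execute("INSERT INTO samples VALUES (1, 'ready')")
writer.commit()
writer.close()

# The AI-facing connection can read...
reader = open_read_only(db_path)
rows = reader.execute("SELECT status FROM samples").fetchall()

# ...but any attempt to modify data raises an error.
try:
    reader.execute("DELETE FROM samples")
    write_blocked = False
except sqlite3.OperationalError:
    write_blocked = True
reader.close()

if __name__ == "__main__":
    print(rows)
    print("write blocked:", write_blocked)
```

Enforcing this at the connection level means the guarantee does not depend on the model writing well-behaved queries.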

3.2. How This Might Transform Your Corporation

The next big leap comes when large data-driven organizations adopt MCP at scale.

Think about genome databases, supply chains, financial systems, or population data. What happens when authenticated users, even with read-only access, can query these directly? They could ask bold questions, see patterns, and find solutions in days instead of months.

The impact could be massive:

Scientists validate hypotheses in real time instead of waiting weeks.

Supply chain managers spot bottlenecks through conversation instead of waiting for compiled reports.

Financial analysts explore patterns without relying on engineers for every request.

Yes, challenges will follow—security, governance, accuracy—but the potential to democratize institutional knowledge is staggering.

When people can query their organization’s data in natural language, it’s more than efficiency. It unlocks insights that were once trapped behind technical walls.

Where is MCP going?

Reality Check: MCP Isn't Perfect (Yet)

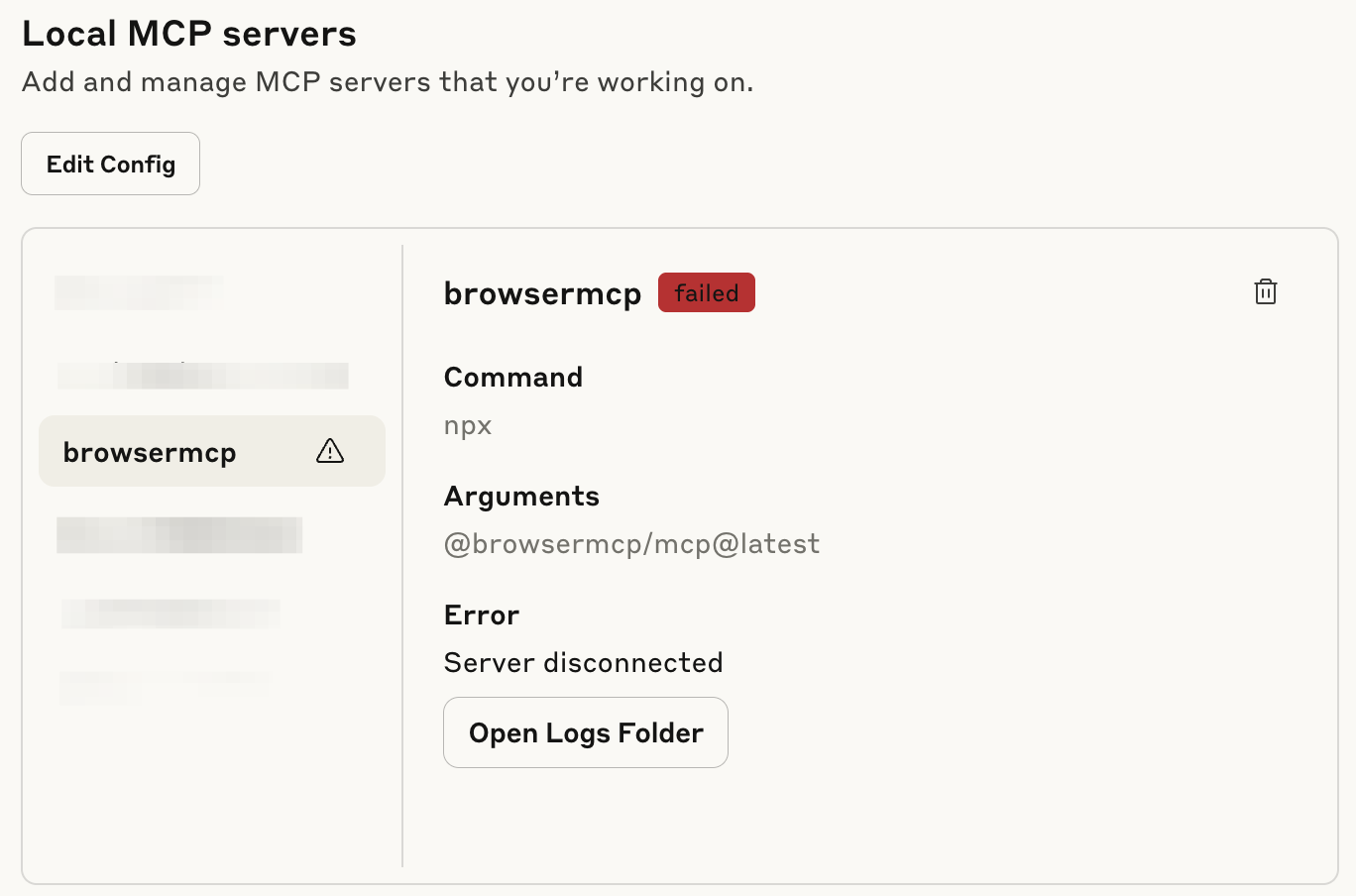

Before getting too excited, it’s worth sharing a couple of moments where MCP still broke down for me. These are only small examples, but they highlight problems we still haven’t solved.

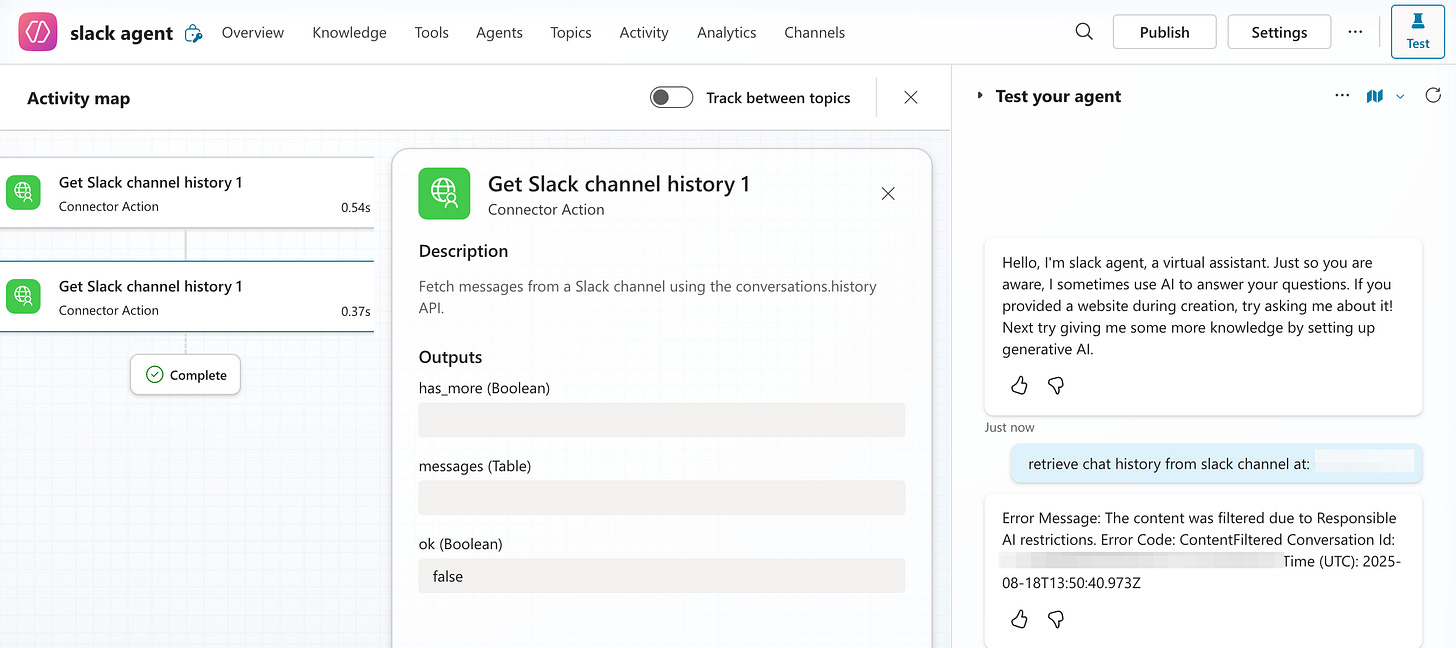

Microsoft Copilot nearly drove me insane.

I tried creating a Slack connector for enterprise use. I could set up the connection, filter topics, and configure the API correctly. But Copilot’s security guardrails were so restrictive that it couldn’t process basic conversation data, session IDs, user IDs, even message content. Every query came back with authentication errors.

The security was so tight it made the integration functionally useless. It felt like classic enterprise software: prioritizing security theater over practical functionality.

Browser MCP is both amazing and terrifying.

It can control your web pages through cookies, update Google Sheets, and navigate websites automatically. The capabilities are mind-blowing (check out how to set up Browser MCP in Claude for more details). But it essentially has access to everything you’re logged into. I use it only occasionally, and always with extreme caution.

MCP is incredibly powerful when implemented thoughtfully, but we’re still in the early stages of finding the right balance between capability and control. The revolution is real, but right now much of it is still potential.

Your Data Becomes Your Competitive Advantage

After months of building and using MCP systems, one thing becomes clear: we’re moving into a world where your unique data is your most valuable asset.

Knowledge, skills, and technology will be democratized. But what won't be democratized are your conversations, your domain expertise, and the body of knowledge stored in your head and your work.

When you connect AI to your personal knowledge base, customer database, or research archives, you’re creating a bridge between your unique understanding and AI’s processing power.

It’s building toward connected intelligence where:

AI learns the patterns in your work and helps you amplify what resonates.

Research archives surface forgotten insights just when you need them.

Systems adapt to your decision-making style and strengthen it over time.

While others use the same general-purpose AI models, you'll have AI that understands your domain, your data, and your decision-making patterns in ways no one else can replicate.

That's the real advantage of MCP: turning your insights into scalable intelligence.

Your MCP Implementation Steps

Here's how to start building your own connected intelligence:

Step 1: Identify Your Data

What domain knowledge do you have that others don't?

Newsletter databases, customer interviews, research notes, project archives?

Start with the data source you query most often manually.

Step 2: Pick One AI System

Prefer Claude?

Set Up Claude Desktop + File System MCP:

Install Claude Desktop and connect it to a folder containing your research files. Ask it questions about your content. This builds confidence with safe, local data.

Prefer ChatGPT?

Create a custom GPT, upload your files, and connect external data through Actions. Less technical setup, but more limited than MCP for complex databases.

Step 3: Find Your Database MCP

If your data lives in Airtable, Notion, or SQL databases, search GitHub for "[platform] MCP" or check the official MCP directory. Follow setup guides step by step.

Step 4: Test with Real Questions

Ask the same questions you'd normally research manually. Compare results. Refine your prompts. Document what works.

Next: Scale Based on Success

Once you're saving hours per week on research, expand to related datasets or build custom connectors for your specific tools.

The goal isn't to build everything at once. It's to eliminate your biggest research friction point first, then expand from there.

What data are you sitting on that you wish AI could help you explore? Or have you tried building something like this already? Share your thoughts in the comments, I’d love to hear!

👉 Interested in this Substack MCP? Join the waitlist here. Paid members, sign up and you’ll get it for free! 🚀

👉 If you’d like to go deeper, I’ve put together an MCP Implementation Guide with the templates, checklists, and setup guides I use to remove data friction. You can find details in premium resources.

👉 If you enjoyed this article, you might also like how I built a second brain with RAG that eliminates tool juggling and other generative AI projects.
